Content from NCBI

Last updated on 2026-02-10 | Edit this page

Exercise 1: Global Cross-database NCBI Search

Overview

Questions

Exercise 1 - How do you efficiently and reliably navigate NCBI’s website?

Exercise 2 - How do you search sequences? - How do you use the right BLAST flavor?

Objectives

Exercise 1 - Familiarize yourself with the differences NCBI databases - Browse and search the databases, using cross-links

Exercise 2 - Use and understand blast alignment tools - Characterize sequences - Understand genomic context and synteny

This part is to encourage you to explore NCBI resources. Questions are examples or real-life questions that you might ask yourself later. There are not necessarily exactly one solution to the question.

Start by going to NCBI’s web site, main search page: https://www.ncbi.nlm.nih.gov/search/. There, you have the list of most of NCBI’s databases, sorted by category. Take some time to explore the following sections: Literature, Genomes, Genes and Proteins.

Task 1.1: Find publications (this is a warm up!)

You want to find the following articles:

- an article about Escherichia coli O104:H4 published by Matthew K Waldor in the New England Journal of Medicine; and

- a paper that has Escherichia coli in the title, whose last author is L. Wang, and was published in PLoS ONE in 2014.

NOTE: you do not know the exact title, list of authors, PMID etc. Use your search skills (solution provided below). There may be more than one result.

Challenge 1.1.1

Find the two articles above using NCBI’s literature database.

Use the advanced search from PubMed, by clicking on “Advanced” (tutorial https://www.ncbi.nlm.nih.gov/books/NBK3827/#pubmedhelp.PubMed_Quick_Start), to build an exact search. Use the different fields to build a search that returns only a single match. In particular, look at the Search field tags.

Use search terms with search tags (“search term”[searchTag]“)and combine them with Boolean operators (”AND”).

Useful search tags: Author [au], Date of publication [dp], Journal [ta], Last author [lastau], Title [ti].

- Waldor[au] AND “Escherichia coli O104:H4” AND NEJM[ta]

- “Wang L”[lastau] AND “Escherichia coli”[ti] AND 2014[dp] and “PLOS One”[ta].

Challenge 1.1.2

Now try to find the most recent paper citing the first article by Rasko et al above. Compare the use of

- NCBI (“Cited by” link) and

- Google Scholar (https://scholar.google.com) (the language settings of Google Scholar can be adjusted in the menu under Settings) and

- Web of Knowledge (https://www.webofknowledge.com; Access from UU: https://www.ub.uu.se/ > Databases A-Z > W > Web of Science Core Collection).

Then:

- How did the two search engines compare?

- Which one was easier to work with dates?

- Which one returned most results?

- Which one has more complete information?

Task 1.2: Find Gene records

You want to find sequences for the subunit A of the Shiga toxin in E. coli O157:H7 Sakai. Try to search in the three following databases: Gene, Nucleotide and Protein.

Challenge 1.2.1

What do the three different databases contain? What information do you get from them?

NCBI is not as smart as Google, and copy/pasting might fail. Try spelling out Escherichia coli and removing parts of the search text.

If you get too many hits, go to the field “Search details”, and refine your search, using the [Organism] field, for example. You don’t need to get exactly one hit or to get the result you want on top. By default, the search will include everything that has the species name anywhere in the record.

Nucleotide database contains mostly genome records, so the Shiga toxin gene might be anywhere on these contigs/genomes.

Challenge 1.2.2

What do the five top results from the Protein database show?

Task 1.3: Understand Gene records

Click on a Gene search result from Task 1.2 and skim through the entire page.

Challenge 1.3.1

Who published the sequence? When? Is there a paper?

There is often a “Bibliography” section. There is also often a “PubMed” link under “Related Information” in the right menu.

Challenge 1.3.2

What is known about the gene? Does the record include taxonomy information?

The taxonomic information is under the “Summary” section, under “Organism” and “Lineage”.

Click on the Organism link and go to the Taxonomy Browser.

Challenge 1.3.3

What can you learn about species taxonomy there? What other useful information is given on this page?

Back to the Gene page, have a look at the “Related information” section in the right column.

Challenge 1.3.4

Where can you go from there?

All the information in that section (“Related information”) is coming

through the Entrez system. In GenBank records, look for

\dbxref (database cross-reference) which provides the

Entrez links.

Task 1.4: Understanding Protein records and sequences

Click on the Protein link for this gene. Look at the content of one of the records.

Challenge 1.4.1

What can you learn here? What information do you find here? What is the name of this format? Are there any known protein domains in this protein? Can you identify a link to these?

You can change the format by clicking on the top left corner.

Look also for “Related information” in the right menu and for the

/db_xref links in the main file.

Challenge 1.4.2

Can you find only the sequence information in FASTA

format? How is the format organized? What is included in the header?

Discuss the advantages of the two formats. Can you find a definition of

both formats on the NCBI website or elsewhere?

If you are stuck, here is the link to

- one Gene record: https://www.ncbi.nlm.nih.gov/gene/916678

- one Protein record: https://www.ncbi.nlm.nih.gov/protein/NP_311001.1

Challenge 1.4.3

Can you find a 3D protein structure for this protein?

Not all proteins have 3D structures. If your protein doesn’t have one, try the following Shiga toxin:

In the right menu, under “Related Information”, there may be a “Related Structures” link.

Challenge 1.4.4

Can you display the genome record on which the gene is encoded?

If you lost the gene page, here is another one:

There are several ways to display the GenBank file. - In the “Genomic regions, transcripts, and products” section, there is a link to the GenBank record - There is another one in the “Related sequences” section (column “Accession and Version”).

Exercise 2: Sequence alignments

In this exericse, you will use the sequence alignment tool BLAST to align and retrieve sequences.

NOTE: the NCBI blast service can be under very heavy load (especially during daytime in the US), and don’t be surprised if a single blast takes >10 minutes. As an alternative, you can use the BLAST service at the EBI website (http://www.ebi.ac.uk). Note that the EBI doesn’t have the same level of tool integration as NCBI, and some parts of the questions might be more challenging to answer with the EBI tools.

In general, try reduce the load on the NCBI server and consider

smaller databases than the standard nr/nt

ones, for example refseq_select or

refseq_protein: under the “Choose Search Set” heading on

the Blast search page, you can choose the right “Database”.

Task 2.1: Find nucleotide blast hits

Find the NCBI blast main page.

Challenge 2.1.1

- What is BLAST?

- What different kinds of BLAST searches are there?

- What is blastn? blastx? blastp? tblastn?

See the different flavors of BLAST: https://blast.ncbi.nlm.nih.gov/doc/blast-quick-start-guide/

Find sequence hits for the following sequence, but first consider the options available on the BLAST page. One option that might come in very handy is the “open results in another window” option, which will allow you to modify your query and rerun it quickly without losing the results:

>a

GATTACTTCAGCCAAAAGGAACACCTGTATATGAAGTGTATATTATTTAAATGGGTACTG

TGCCTGTTACTGGGTTTTTCTTCGGTATCCTATTCCCGGGAGTTTACGATAGACTTTTCG

ACCCAACAAAGTTATGTCTCTTCGTTAAATAGTATACGGACAGAGATATCGACCCCTCTT

GAACATATATCTCAGGGGACCACATCGGTGTCTGTTATTAACCACACCCCACCGGGCAGT

TATTTTGCTGTGGATATACGAGGGCTTGATGTCTATCAGGCGCGTTTTGACCATCTTCGT

CTGATTATTGAGCAAAATAATTTATATGTGGCCGGGTTCGTTAATACGGCAACAAATACT

TTCTACCGTTTTTCAGATTTTACACATATATCAGTGCCCGGTGTGACAACGGTTTCCATG

ACAACGGACAGCAGTTATACCACTCTGCAACGTGTCGCAGCGCTGGAACGTTCCGGAATG

CAAATCAGTCGTCACTCACTGGTTTCATCATATCTGGCGTTAATGGAGTTCAGTGGTAAT

ACAATGACCAGAGATGCATCCAGAGCAGTTCTGCGTTTTGTCACTGTCACAGCAGAAGCC

TTACGCTTCAGGCAGATACAGAGAGAATTTCGTCAGGCACTGTCTGAAACTGCTCCTGTG

TATACGATGACGCCGGGAGACGTGGACCTCACTCTGAACTGGGGGCGAATCAGCAATGTG

CTTCCGGAGTATCGGGGAGAGGATGGTGTCAGAGTGGGGAGAATATCCTTTAATAATATA

TCAGCGATACTGGGGACTGTGGCCGTTATACTGAATTGCCATCATCAGGGGGCGCGTTCT

GTTCGCGCCGTGAATGAAGAGAGTCAACCAGAATGTCAGATAACTGGCGACAGGCCCGTT

ATAAAAATAAACAATACATTATGGGAAAGTAATACAGCTGCAGCGTTTCTGAACAGAAAG

TCACAGTTTTTATATACAACGGGTAAATAAAGGAGTTAAGCATGAAGAAGATGTTTATGGChallenge 2.1.2

- Is it a gene?

- If yes, what does it encode?

- What is the closest organism? What can you infer about its taxonomic distribution?

What does a protein-coding gene need to produce proteins?

Now go back to the blast main page.

Challenge 2.1.3

- Did you choose the right blast algorithm to answer the questions above?

- Can you do better?

What if you try another flavor of the blast suite?

What if you aligned the gene to the genome while keeping the codon frame?

Repeat the procedure for this other sequence:

>b

GATTACTTCAGCCAAAAGGAACACCTGTATATGAAGTGTATATTATTTAAATGGGTACTG

TGCCTGTTACTGGGTTTTTCTTCGGTATCCTATTCCCGGGAGTTTACGATAGACTTTTCG

ACCCAACAAAGTTATGTCTCTTCGTTAAATAGTATACGGACAGAGATATCGACCCCTCTT

GAACATATATCTCAGGGGACCACATCGGTGTCTGTTATTAACCACACCCCACCGGGCAGT

TATTTTGCTGTGGATATACGAGGGCTTGATGTCTATCAGGCGCGTTTTGACCATCTTCGT

CTGATTATTGAGCAAAATAATTTATATGTGGCCGGGTTCGTTAATACGGCAACAAATACT

TTCTACCGTTTTTCAGATTTTACACATATATCAGTGCCCGGTGGACAACGGTTTCCATGA

CAACGGACAGCAGTTATACCACTCTGCAACGTGTCGCAGCGCTGGAACGTTCCGGAATGC

AAATCAGTCGTCACTCACTGGTTTCATCATATCTGGCGTTAATGGAGTTCAGTGGTAATA

CAATGACCAGAGATGCATCCAGAGCAGTTCTGCGTTTTGACTGTCACTGTCACAGCAGAA

GCCTTACGCTTCAGGCAGATACAGAGAGAATTTCGTCAGGCACTGTCTGAAACTGCTCCT

GTGTATACGATGACGCCGGGAGACGTGGACCTCTTTTTTTACTCTGAACTGGGGGCGAAT

CAGCAATGTGCTTCCGGAGTATCGGGGAGAGGATGGTGTCAGAGTGGGGAGAATATCCTT

TAATAATATATCAGCGATACTGGGGACTGTGGCCGTTATACTGAATTGCCATCATCAGGG

GGCGCGTTCTGTTCGCGCCGTGAATGAAGAGAGTCAACCAGAATGTCAGATAACTGGCGA

CAGGCCCGTTATAAAAATAAACAATACATTATGGGAAAGTAATACAGCTGCAGCGTTTCT

GAACAGAAAGTCACAGTTTTTATATACAACGGGTAAATAAAGGAGTTAAGCATGAAGAAG

ATGTTTATGGChallenge 2.1.4

How does sequence b compare to the first sequence? Is it

also a gene?

if you get stuck, try e.g. StarORF (http://star.mit.edu/orf/index.html).

Challenge 2.1.5

- Can you perform a pairwise alignment between both sequences?

- What are the differences?

Check the “Align two or more sequences” on the BLAST search page.

Task 2.2: Find protein blast hits

Now find sequences with similarity to this protein

>c

MMNRSIYRQFVRYTIPTVAAMLVNGLYQVVDGIFIGHYVGAEGLAGINVAWPVIGTILGIGMLVGVGTGA

LASIKQGEGHPDSAKRILATGLMSLLLLAPIVAVILWFMADDFLRWQGAEGRVFELGLQYLQVLIVGCLF

TLGSIAMPFLLRNDHSPNLATLLMVIGALTNIALDYLFIAWLQWELTGAAIATTLAQVVVTLLGLAYFFS

ARANMRLTRRCLRLEWHSLPKIFAIGVSSFFMYAYGSTMVALHNTLMIEYGNAVMVGAYAVMGYIVTIYY

LTVEGIANGMQPLASYHFGARNYDHIRKLLRLAMGIAVLGGMAFVLVLNLFPEQAIAIFNSSDSELIAGA

QMGIKLHLFAMYLDGFLVVSAAYYQSTNRGGKAMFITVGNMSIMLPFLFILPQFFGLTGVWIALPISNIV

LSTVVGIMLWRDVNKMGKPTQVSYAChallenge 2.2.1

- What is this gene coding for?

- Where did it likely come from?

Challenge 2.2.2

- Can you align nucleotide sequences (sequence

a, Task 2.1) against a protein database? - If so, how?

Challenge 2.2.3

- Can you align proteins to a nucleotide database?

- If so, how? If not, why not?

Task 2.3: Find gene and genome hits

This task should be performed at the NCBI page (not at EBI).

What is the following sequence?

>d

GTGTCTAAAGAAAAATTTGAACGTACAAAACCGCACGTTAACGTTGGTACTATCGGCCACGTTGACCACG

GTAAAACTACTCTGACCGCTGCAATCACCACCGTACTGGCTAAAACCTACGGCGGTGCTGCTCGTGCATT

CGACCAGATCGATAACGCGCCGGAAGAAAAAGCTCGTGGTATCACCATCAACACTTCTCACGTTGAATAT

GACACCCCGACCCGTCACTACGCACACGTAGACTGCCCGGGGCACGCCGACTATGTTAAAAACATGATCA

CCGGTGCTGCTCAGATGGACGGCGCGATCCTGGTAGTTGCTGCGACTGACGGCCCGATGCCGCAGACTCG

TGAGCACATCCTGCTGGGTCGTCAGGTAGGCGTTCCGTACATCATCGTGTTCCTGAACAAATGCGACATG

GTTGATGACGAAGAGCTGCTGGAACTGGTTGAAATGGAAGTTCGTGAACTTCTGTCTCAGTACGACTTCC

CGGGCGACGACACTCCGATCGTTCGTGGTTCTGCTCTGAAAGCGCTGGAAGGCGACGCAGAGTGGGAAGC

GAAAATCCTGGAACTGGCTGGCTTCCTGGATTCTTACATTCCGGAACCAGAGCGTGCGATTGACAAGCCG

TTCCTGCTGCCGATCGAAGACGTGTTCTCCATCTCCGGTCGTGGTACCGTTGTTACCGGTCGTGTAGAAC

GCGGTATCATCAAAGTTGGTGAAGAAGTTGAAATCGTTGGTATCAAAGAGACTCAGAAGTCTACCTGTAC

TGGCGTTGAAATGTTCCGCAAACTGCTGGACGAAGGCCGTGCTGGTGAGAACGTAGGTGTTCTGCTGCGT

GGTATCAAACGTGAAGAAATCGAACGTGGTCAGGTACTGGCTAAGCCGGGCACCATCAAACCGCACACCA

AGTTCGAATCTGAAGTGTACATTCTGTCCAAAGATGAAGGCGGCCGTCATACTCCGTTCTTCAAAGGCTA

CCGTCCGCAGTTCTACTTCCGTACTACTGACGTGACTGGTACCATCGAACTGCCGGAAGGCGTAGAGATG

GTAATGCCGGGCGACAACATCAAAATGGTTGTTACCCTGATCCACCCGATCGCGATGGACGACGGTCTGC

GTTTCGCAATCCGTGAAGGCGGCCGTACCGTTGGTGCGGGCGTTGTTGCTAAAGTTCTGGGCTAAChallenge 2.3.1

- What organism is it likely from?

- Is it a gene?

- If yes, which one?

- What does it code for?

Use rather blastn to identify the origin of the gene. Explore the alignments of the first hit, and try the “Graphics” button associated with the results.

Challenge 2.3.2

- Where is it located on the genome (precision at ~10kb is good enough)?

- Are there several copies in the genome?

Mark down the name of the gene (generally three letters in lower case and one in uppercase). From the protein record, there is a link to the genome record, which has a “graphics” interface.

Not all genomes are equally well annotated and you might have to search for a genome that is well annotated. One good example is Escherichia fergusonii ATCC 35469

Challenge 2.3.3

- How far is this gene (and possible copies) from the origin of replication?

- How is it oriented with respect to replication?

What genes are reliable markers for the origin of replication? Can you find a paper about that? Now can you find that gene in the genome above?

What is the dnaA gene doing?

Task 2.4 (optional): Explore the synteny functions of the STRING database

We are interested in genes that are normally located next to each other, and their taxonomic breadth.

Open the STRING database (https://string-db.org/). Search for the Shiga toxin-encoding genes in bacteria.

Challenge 2.4.1

- Can you find the Shiga toxin-coding genes in Escherichia coli K-12?

- Do you find the same strain as above?

Challenge 2.4.2

Can you tell on what chromosomal feature these genes are located?

What genes are located next to these genes?

What is a lysogenic phage?

Feel free to explore the different functions of the database. What do the different links in the network mean? What happens if you remove the least reliable interaction sources (i.e. text mining and databases?

Now modify the settings to keep only Neighborhood as edges. Go back to the Viewers tab. Search for the other gene discussed above, tufA, do the same as for stx1, and compare the results.

Challenge 2.4.3

- How many genes are co-localized with stx1?

- What are the functions of these?

Challenge 2.4.4

- How many genes are co-localized with tufA?

- What are the functions of the gene?

- Does the function correlate with the taxonomic breadth of the gene?

For both genes, select the Neighborhood viewer.

Challenge 2.4.5

- How taxonomically widespread are the stx1, respectively tufA genes?

- How conserved is the gene order for these two genes?

- NCBI website is very, very comprehensive

- The Entrez cross-referencing system helps retrieving links bits of data of different type.

Content from BLAST

Last updated on 2026-02-10 | Edit this page

Overview

Questions

- How to use the command-line version of

BLAST?

Objectives

- Run different flavors of

BLAST - Use different databases

- Use profile-based

BLASTto retrieve distant homologs

Introduction

The goal of this exercise is to practice with the command line-based

version of BLAST. It is also the first step in building

phylogenetic trees, namely by gathering homologous sequences that will

then be aligned. The alignment is finally used to build a tree.

The whole exercise is based on RpoB, the β-subunit of the bacterial RNA polymerase. This protein is essential to the cell, and present in all bacteria and plastids. It is also very conserved, and thus suitable for deep-scale phylogenies.

BLAST

BLAST (basic local alignment search tool, also referred

to as the Google of biological research) is one of the most widely used

tools in bioinformatics. The wide majority of its uses can be performed

online, but for larger searches, batches or advanced uses, the command

line version, performed locally on a computer or cluster, is

indispensable.

Resources at UPPMAX

BLAST is available at UPPMAX. To load the

blast module, you will need to load the

bioinfo-tools module first. The blast module

also loads the blast_databases module, to be able to use

the standard databases that NCBI maintains.

Databases available at UPPMAX are described here: https://docs.uppmax.uu.se/databases/blast/. The

blast_databases module implies that you don’t need to

specify where the databases are located on the file system. In detail,

it sets the BLASTDB variable to the right folder. You can

see it by typing echo $BLASTDB in the terminal.

Gene and protein records are usually associated with a

taxid, to describe what organisms they come from. This can

be very useful to limit the search to a certain taxon, or to exclude

another taxon. E.g. if you want to investigate whether a certain gene

has been transferred from bacteria to archaea: you would search for that

specific by excluding all bacteria and eukaryotes.

Taxonomy resources at UPPMAX are described here: https://docs.uppmax.uu.se/databases/ncbi/

Exercise 0: Login to Uppmax

This is just a reminder of the introduction to Linux and how to login to Uppmax.

Challenge 0.1

Login to Uppmax and navigate to the course folder, create a folder

for a blast exercise.

Remember the best practices you learned for file naming.

All exercises should be performed inside

/proj/g2020004/nobackup/3MK013/<username> where

<username> is your own folder.

ssh is used to connect to an external server.

-Y forwards the graphical display to your computer. The

address of the server is rackham.uppmax.uu.se. You need to

add your user name in front of it, with a @ in between.

The course folder is at

/proj/g2020004/nobackup/3MK013/.

There, make a folder with your username.

Inside it, create a blast folder for this exercise.

Challenge 0.2

Access an interactive session that is booked for us. The

session is uppmax2025-3-4. Use the snowy

cluster, for 4 hours.

Exercise 1: Finding and retrieving homologs

Here, you will first find the protein query to start your search with. You will test different methods to create a file containing only RpoB from E. coli. Then, you will use that file as a query to find homologs of RpoB in different databases.

Task 1.1: Retrieve the RpoB sequence for E. coli

First, use your recently acquired NCBI-fu to

retrieve the sequence from E. coli K-12. There are many

genomes, and thus many identical proteins, grouped into Identical Protein Groups.

NCBI’s databases are (almost) non-redundant (that’s where the name of

the largest protein database, nr comes from), and thus only

one representative per IPG is present. Thus search in IPG, and find the

IPG’s accession number. Write down the accession number somewhere.

Challenge 1.1.1: Finding E. coli’s RpoB

Find the accession number for the IPG containing the RpoB sequence of

E. coli K-12, and download the sequence in FASTA

format.

The accession number is WP_000263098.1.

The sequence in fasta format can be downloaded at https://www.ncbi.nlm.nih.gov/protein/ by searching the

accession number clicking on the “Send to” link and then choosing

FASTA format. Save the file under

rpoB_ecoli.fasta.

The fasta file should look like:

>WP_000263098.1 MULTISPECIES: DNA-directed RNA polymerase subunit beta [Enterobacteriaceae]

MVYSYTEKKRIRKDFGKRPQVLDVPYLLSIQLDSFQKFIEQDPEGQYGLEAAFRSVFPIQSYSGNSELQY

VSYRLGEPVFDVQECQIRGVTYSAPLRVKLRLVIYEREAPEGTVKDIKEQEVYMGEIPLMTDNGTFVINGTask 1.2: Push the sequence to UPPMAX

The sequence is located on your computer, and you need to transfer it

to your computer. You will test several methods to push it to UPPMAX, to

be able to use it as a query for BLAST.

Challenge 1.2.1: Use scp

scp, secure file copy, is a tool to copy files via SSH,

the same protocol you use to login to UPPMAX. You can use it on your

computer with the following syntax:

The remote file location can be a relative path from your home or an

absolute path, starting with /.

To bring up a local terminal on your Windows computer, click on the

“+” sign on the main window of MobaXTerm. If that doesn’t work, use

SFTP. Click on Session, SFTP, and fill in the Remote host as usual

rackham.uppmax.uu.se and your username. Navigate to

/proj/g2020004/nobackup/3MK013/<username>/blast on

the right panel, then drag and drop files between your computer and

Uppmax.

Use man scp to show the manual for scp.

Challenge 1.2.2: Use copy/paste

A quick and dirty method is to open a graphical text editor on the

remote server and paste the information in it. One such tool is

gedit. Paste the content of the file into

gedit and save it in the appropriate folder, under

rpoB_ecoli.fasta.

Challenge 1.2.3: Extract sequence from database

Since you know the accession number of the sequence to be used as a

query, you can use the blast tools to extract that specific

sequence from one of the databases available at UPPMAX. The tool in

question is blastdbcmd. That specific sequence is

presumably present in multiple databases. In doubt, one could search

nr. But since E. coli K-12 is present in the tiny

landmark

database, you can use that one.

Put the sequence, in FASTA format, into a file called

rpoB_ecoli.fasta

The manual for blastdbcmd can be obtained with:

If you have closed your session, you may need to use

module load to load the appropriate module.

You may want to look into -db and -entry

arguments.

You should use the -db option and the

-entry one.

Challenge 1.2.4 (optional): Size of databases

Can you figure out a way to find the size of these two databases? How does it affect the time to retrieve information from them? Is it worth thinking about it?

You can look at the size of the databases by using

blastdbcmd and the flag -info. To see how much

time it takes to retrieve that single sequence from either database, use

the command time in front of the command:

OUTPUT

Database: Landmark database for SmartBLAST

403,974 sequences; 229,101,880 total residues

Date: Oct 17, 2023 5:37 PM Longest sequence: 35,991 residues

[...]

Database: All non-redundant GenBank CDS translations+PDB+SwissProt+PIR+PRF excluding environmental samples from WGS projects

721,441,320 sequences; 277,730,601,621 total residues

Date: Feb 25, 2024 2:57 AM Longest sequence: 98,182 residues

[...]

real 0m1.369s # for landmark

[...]

real 0m10.059s. # for nr

Task 1.3: Finding homologs to RpoB with BLAST

Now we have a query to start our search. Check that the file is

present (ls) and that it looks like a FASTA

file (less).

OUTPUT

>WP_000263098.1 unnamed protein product [Escherichia coli] >NP_312937.1 RNA polymerase beta subunit [Escherichia coli O157:H7 str. Sakai]

MVYSYTEKKRIRKDFGKRPQVLDVPYLLSIQLDSFQKFIEQDPEGQYGLEAAFRSVFPIQSYSGNSELQYVSYRLGEPVF

[...]The file header might look slightly different, depending on how you

obtained it. Now use blastp to find homologs of the RpoB

protein. Use the help for blastp to explore the options you

need to set.

Challenge 1.3.1: Use blastp to

find homologs in the landmark database

The path to the databases is already set correctly by the

modules load blast command. See echo $BLASTDB

to display it. Put the results into a file called

rpoB_landmark.blast

Explore the results of the blastp command by displaying

the file in which you put the output of blastp. Inspect the

alignments, including those at the bottom of file, which have worse

alignments. In particular look at the E-values: do all hits look like

true homologs? How can you change that?

Challenge 1.3.2: Use blastp to

find better homologs

Find the right option to change the E-value threshold to include hits. What is the default E-value? What does that mean?

Try to rerun the blastp search with a more reasonable

value than the default.

As a rule of thumb, hits that have a E-value < 1e-6 are bona

fide homologs. Look at the -evalue option.

Explore the results again. Is it better, even the last alignments?

The default format of the blast output is

“human-friendly”, something that resembles the output that is created on

the NCBI website. To produce an output that is even closer to NCBI’s

output, use the -html option.

The default format is fine for visual inspection, but not very

convenient for computers to read. For example, in some cases you might

want to perform data analysis on the output, e.g. to sort the results

further or to compare the output of different runs. In that case, a

tabular-like output is to prefer. The main option is

-outfmt, and setting it to 6 will produce such

a tabular output. The columns can be further specified, see the

manual.

Challenge 1.3.3: Explore the output format of

BLAST

Play with the different options for outfmt, the different formats and how to customize the tabular formats (6, 7 and 10).

Finally, produce a standard tabular output for the same run as above

and put the result into a file called rpoB_landmark.tab.

This file can then downloaded to your own computer and opened with

R or with Excel.

Note that the query sometimes yields several hits in the same query protein. This is caused by the protein being long and having its different domains being separated by less conserved domains.

blastp command:

To import the file to your computer, to the current directory, use

the same scp program as above. This should be run

on your own computer, not from UPPMAX.

Task 1.4: Use a larger database

So far you have used the landmark database, which is

tiny. Now, use a different, larger database, to gather more results from

a broader set of bacteria. Run a blastp again. How many

significant hits do you find in these?

Challenge 1.4.1: Larger database

refseq_select_prot and refseq_protein are

good candidates. The former is smaller than the latter. Note that these

two runs will take time, so run only the one to

refseq_select_prot. We will run it in the background

(adding a & at the end of the command) so that you can

continue with other tasks and come back to that one later.

You can check whether blastp is still running by typing

ps:

OUTPUT

PID TTY TIME CMD

13920 pts/64 00:00:00 bash

33117 pts/64 00:00:00 blastp

35427 pts/64 00:00:00 psIf you see blastp there, it means it is still running.

It could take up to 30 minutes. When blastp is done, count

the number of hits obtained.

The list of locally available databases is listed here: https://docs.uppmax.uu.se/databases/blast/

When it has finished (it may take up to 30 minutes), count the rows to have the number of hits:

OUTPUT

500 rpoB_refseq_select.tabYou have actually hit the maximum number of aligned sequences to keep

by default (500). You could change that by using the

-max_target_seqs option and setting it to a larger

number.

Task 1.5: Extract the sequences from the database

You found hits, i.e. proteins that show similarity with the query

protein. To prepare for the next steps (multiple sequence alignment and

building trees), you will need to retrieve the actual proteins and put

them in a FASTA file. The simplest way is to directly

extract them from the database, using the same tool

(blastdbcmd) as above. You will first need to extract a

list of the accession numbers from the blast results.

Challenge 1.5.1: Extract the sequences with

blastdbcmd

Use blastdbcmd to retrieve the accession ids from the

landmark database. To prepare the list of proteins to

extract, cut the blast results to keep the accession number

of the hits. As you noticed above, there are multiple hits per protein

and one hit per line, resulting in each subject protein being possibly

present several times. You will need to produce a non-redundant list of

ids.

The second column of the default tabular output provides the

accession id. cut the tabular output of the blast file,

pipe it to sort and then to uniq, and send the

result in a file. Then use that file as a input to blastcmd

to extract the proteins. Last time we used -entry because

we had just one, but there is another argument that takes a list of

entries instead. Put the result in the rpoB_homologs.fasta

file.

Challenge 1.5.2: Count the sequences

Can you count how many sequences were included in the fasta file? Use

grep and your knowledge of the FASTA

format.

Exercise 2: Using PSI-BLAST to retrieve distant homologs of proteins

In this exercise, you will use another flavor of BLAST

to retrieve distant homologs of a protein. As an example, we will use

the protein RavC, present in the genomes of Legionella

bacteria. This protein is an effector protein, injected by

Legionella into the cytoplasm of their host (protists). The

exact function is unknown, but it is presumably important, as it is

conserved throughout the whole order Legionellales. Many

effectors found in this group are derived from eukaryotic proteins, and

this is what you will test here: does RavC have a homolog in

eukarotes?

The strategy is to use psiblast, which uses - instead of

a single query - a matrix of amino-acid frequencies as a query.

psiblast is an iterative program: you generally start with

one sequence, gather bona fide homologs, use the profile of

these to query the database again, gather new homologs, recalculate the

profile, then re-query the database, etc. The search may converge after

a certain number of iterations, i.e. there no more new homologs to find

with the latest profile.

In this case, you will use a slightly different strategy: you will

start with one sequence, align it to refseq_select_prot but

only to sequences in the order Legionellales, using the

taxids options that limits the taxonomic scope of the

search. You will save the profile (PSSM) generated by the first

psiblast round and reuse this to then query the whole

database, with three iterations.

Task 2.1: Extract RavC from a database

As above, you will extract the sequence of RavC from a database. This

time you will use refseq_select_prot, since there are no

Legionella in landmark. The accession number of

RavC that you will use is WP_010945868.1.

Challenge 2.1.1: Extract RavC

Retrieve sequence WP_010945868.1 from the

refseq_protein database and put it into a file called

ravC_LP.fasta. Check the content of the FASTA

file.

blastdbcmd -help

OUTPUT

>WP_010945868.1 RMD1 family protein [Legionella pneumophila] >ERH46094.1 hypothetical protein N750_05210 [Legionella pneumophila str. Leg01/53] >ERH46577.1 hypothetical protein N751_07400 [Legionella pneumophila str. Leg01/11] >ERI48669.1 hypothetical protein N749_09265 [Legionella pneumophila str. Leg01/20] >MFO2512789.1 RMD1 family protein [Legionella pneumophila serogroup 2] >MFO2594790.1 RMD1 family protein [Legionella pneumophila serogroup 3] >MFO2645706.1 RMD1 family protein [Legionella pneumophila serogroup 8] >MFO2989133.1 RMD1 family protein [Legionella pneumophila serogroup 6] >MFO3234997.1 RMD1 family protein [Legionella pneumophila serogroup 5] >MFO3476243.1 RMD1 family protein [Legionella pneumophila serogroup 7] >MFO8588967.1 RMD1 family protein [Legionella pneumophila serogroup 14] >MFO8774718.1 RMD1 family protein [Legionella pneumophila serogroup 10] >MFP3789783.1 RMD1 family protein [Legionella pneumophila serogroup 9]

MECLSFCVAKTIDLTRLDLHLKNVSKEFSAVKTRDVIRLNSHRNKDHTLFIFKNGTVVSWGVKRYQIHEYLDIIKLLVDK

PVALLVHDEFHYQIGDKTAIEPHGFYDVDCLTIEEDSDELKLSLSYGFSQSVKLQYFETIIDALIEKYNPLIQALSHKGE

MPISRKQIQQVIGEILGAKSELNLISNFLYHPKYFWQHPTLEEHFSMLERYLHIQRRVNAINHRLDTLNEIFDMFNGYLE

SRHGHHLEIIIIVLIAVEIIIAVMNFHFTask 2.2: Align RavC to sequences belonging to Legionellales

That task is a bit complex, so let’s break it down in several steps. You will:

- align the RavC sequence to the

refseq_select_protdatabase, usingpsiblast, and put the result into the fileravC_Leg.psiblast. - save the PSSM (the amino-acid profile built by psiblast) after the last round, both in its “native” form and in text format.

- filter hits so that only hits with E-value < 1e-6 are shown

- filter hits so that only hits with E-value < 1e-10 are included in the PSSM

- filter hits so that only hits belonging to the order Legionelalles are included

Challenge 2.2.1: Psiblast

Build the command, using the psiblast command, the query

you extracted above and the refseq_select_prot

database.

Don’t run the command yet, as this will run for a while! We are just building the command now. Wait for the end of the section.

Challenge 2.2.2: Saving the PSSM

Now add the options to save the PSSM after the last round, and save the PSSM both as binary and ascii form. Add these options to the command above, but you will only run it when it is finished.

Challenge 2.2.3: Thresholds

Now add the E-value thresholds. You want to display hits with E-value < 1e-6, but only include those with E-value < 1e-10. Add the options to the rest.

Challenge 2.2.4: Taxonomic range

Now limit the results to the order Legionellales. Find the taxid of this group on the NCBI website.

Note: this feature has been upgraded in the latest version of

BLAST (2.15.0+). It is now possible to include all

descendants of a single taxid, using only that taxid. In previous

versions of BLAST, you had to include all descending taxids

using a script.

Check out the Taxonomy section of NCBI: https://www.ncbi.nlm.nih.gov/Taxonomy/

Challenge 2.2.5: Running the command and examining the results

Run now the full command as above. When it’s done, look at the

resulting files. There should be three of them. Take some time to

explore these three files, especially the alignments resulting from the

psiblast.

Task 2.3: Align the PSSM to the whole database

You will now take the resulting PSSM and use that as a query to

perform a psiblast against the whole

refseq_select_prot database (not only against

Legionellales). You want to perform max 10 iterations (the

search will stop if it converges before the tenth iteration), and

increase the max number of target sequences to gather to 1000 per

iteration. You also want to change the output to a tabular form with

comments and add more columns to get into more details.

Challenge 2.3.1: Align the PSSM, set E-value thresholds, iteration and max sequences

Build the command as above, but don’t set the -query

option, use the option that allows to input a PSSM instead. Set the

E-value thresholds as above, the number of iterations to 10 and the

maximum number of sequences to gather to 1000 (per round). Direct the

result to the file ravC_all.psiblast.

Don’t run it yet, more options to come.

Challenge 2.3.2: Set the output format

You want to have a tabular result format with comments, to help you understand the output. You also want to use the standard columns but add the query coverage per subject, the scientific and common name of the subject sequence, as well as which super-kingdom (or domain) it belongs to.

Inspect the “Formatting options” section of the psiblast help page.

The correct -outfmt is 7. The string with the correct

columns is composed with this number and then column names, separated by

spaces, the whole enclosed by double quotes. An example in the help file

of psiblast is "10 delim=@ qacc sacc score"

psiblast -in_pssm

Challenge 2.3.3: Run the command and examine the results

This will take a while to run, maybe 30 minutes. When done, open the result file and examine it. Did you find hits in the eukaryotes?

- Using BLAST is a blast!

- Choice of database is a crucial trade-off between efficiency and sensitivity

Content from Multiple sequence alignment

Last updated on 2026-02-10 | Edit this page

Overview

Questions

- How do you align multiple sequences?

- Why is it important to properly align sequences?

Objectives

- Use

mafftto align multiple sequences. - Test different algorithms.

Introduction

In this episode, we are exploring multiple sequence alignment (MSA).

In the first task, you are going to use mafft

to align homologs of RpoB, the β subunit of the

bacterial RNA polymerase. It is a long, multi-domain protein, suitable

for showing issues related to MSA.

In the second task, you will trim that alignment to remove poorly aligned regions.

But first, go to your own folder and create a phylogenetics subfolder. You will use the alignments for other tutorials as well.

Task 1: Use different flavors of MAFFT and compare the

results

Start by finding the data, which is a fasta file containing 19 sequences of RpoB,

gathered by aligning RpoB from E. coli to a very small database

present at NCBI, the landmark

database. These sequences are a subset of the sequences gathered in the

previous exercise on BLAST.

The file is available in

/proj/g2020004/nobackup/3MK013/data/.

Challenge 1.1: prepare the terrain

The course base folder is at

/proj/g2020004/nobackup/3MK013. Go to your own folder,

create a phylogenetics subfolder, and move into it. Also,

start the interactive session, for 4 hours. The session

name is uppmax2025-3-4 and the cluster is

snowy.

Challenge 1.2: copy the file

Copy the file to your newly created phylogenetics

folder. Use a relative path.

Use the ../../ to see what’s two folders up, and then

data/rpoB/rpoB.fasta

Challenge 1.1: prepare the terrain (continued)

Renaming sequences

Look at the accession ids of the fasta sequences: they are not very informative.

OUTPUT

>WP_000263098.1 MULTISPECIES: DNA-directed RNA polymerase subunit beta [Enterobacteriaceae]

>WP_011070610.1 DNA-directed RNA polymerase subunit beta [Shewanella oneidensis]

>WP_003114335.1 DNA-directed RNA polymerase subunit beta [Pseudomonas aeruginosa]

>WP_002228870.1 DNA-directed RNA polymerase subunit beta [Neisseria meningitidis]

>WP_003436174.1 MULTISPECIES: DNA-directed RNA polymerase subunit beta [Eubacteriales]Keep the taxonomic name following the accession id, separated by

_, using a bit of sed magic. Save the

resulting file for later use, and show the headers again.

BASH

sed "/^>/ s/>\([^ ]*\) \([^\[]*\)\[\(.*\)\]/>\\3_\\1/" rpoB.fasta | sed "s/ /_/g" > rpoB_renamed.fasta

grep '>' rpoB_renamed.fasta | head -n 5Nerd alert: dissecting the sed

magic

This is optional reading.

The sed

command matches (and possibly substitutes) strings (chains of

characters). In that case, the goal is to simplify the header by putting

the taxonomic group first but keeping it informative enough by adding

the accession number. The strategy is to match what is between the

> and the first space, then what is between square

parentheses, and put them back, separated by an underscore.

The sed command first matches only lines that start with

a > (/^>/). It then substitutes (general

pattern s/<something>/<something else>/) a text

with another one. The first part is to match the accession id, between

(escaped) square brackets, which comes after the > at

the beginning of the line. This is expressed as

>\([^ ]*\): match any number of non-space characters

([^ ]*) and put it in memory (what is between

\( and \)). Then, the description is matched

by \([^\[]*\), any number of characters that are not an

opening bracket [, and put into memory. Finally, the

taxonomic description is matched: \[\(.*\)\], that is, any

number of characters between square brackets is stored into memory. The

whole line is then replaced with a >, the third match

into memory, followed by an _ and the content of the first

match into memory >\\3_\\1. Then, all the input is

passed through sed again, to replace any space with an underscore:

s/ /_/g and the output is stored in a different file.

OUTPUT

>Enterobacteriaceae_WP_000263098.1

>Shewanella_oneidensis_WP_011070610.1

>Pseudomonas_aeruginosa_WP_003114335.1

>Neisseria_meningitidis_WP_002228870.1

>Eubacteriales_WP_003436174.1It looks better.

Alignment with progressive algorithm

Use mafft with a progressive algorithm to align the

sequences.

Challenge 1.3: Run mafft with

progressive algorithm

Use the FFT-NS-2 algorithm from mafft to align the

renamed sequences. Also, record the time it takes for mafft

to complete the task.

Use the module command to load

bioinfo-tools and MAFFT. Use time

to record the time.

The help obtained through mafft -h is not very

informative about algorithms, so check the mafft

webpage.

mafft actually has one executable program for each

algorithm, all starting with mafft-. A way to display them

all is to type that and push the Tab key twice to see all

possibilities.

BASH

module load <general module> <mafft module>

time mafft-<algorithm> <fasta_file> > rpoB.fftns.alnOUTPUT

...

[...]

Strategy:

FFT-NS-2 (Fast but rough)

Progressive method (guide trees were built 2 times.)

If unsure which option to use, try 'mafft --auto input > output'.

For more information, see 'mafft --help', 'mafft --man' and the mafft page.

The default gap scoring scheme has been changed in version 7.110 (2013 Oct).

It tends to insert more gaps into gap-rich regions than previous versions.

To disable this change, add the --leavegappyregion option.

real 0m1.125s

user 0m0.818s

sys 0m0.181sThe last line is the output of the time command. It took

1.125 seconds to complete this time.

Type mafft and try tab-complete to see all versions of

mafft.

Try the command time

Alignment with iterative algorithm

Now use one of the supposedly better iterative algorithm of

mafft to align the same sequences. Choose the E-INS-i

algorithm which is suited for proteins that have highly conserved

motifs interspersed with less conserved ones.

Take a few minutes to read upon the different alignment strategies on the page above.

Challenge 1.4

Use the superior E-INS-i algorithm from mafft to align the renamed

sequences. Also, record the time it takes for mafft to

complete the task.

OUTPUT

[...]

Strategy:

E-INS-i (Suitable for sequences with long unalignable regions, very slow)

Iterative refinement method (<16) with LOCAL pairwise alignment with generalized affine gap costs (Altschul 1998)

If unsure which option to use, try 'mafft --auto input > output'.

For more information, see 'mafft --help', 'mafft --man' and the mafft page.

The default gap scoring scheme has been changed in version 7.110 (2013 Oct).

It tends to insert more gaps into gap-rich regions than previous versions.

To disable this change, add the --leavegappyregion option.

Parameters for the E-INS-i option have been changed in version 7.243 (2015 Jun).

To switch to the old parameters, use --oldgenafpair, instead of --genafpair.

real 0m7.367s

user 0m7.022s

sys 0m0.244sIt now took 7.36 seconds to complete this time, i.e. 6 times more than with the progressive algorithm. It doesn’t make a big difference now, but with hundreds of sequences it will make one.

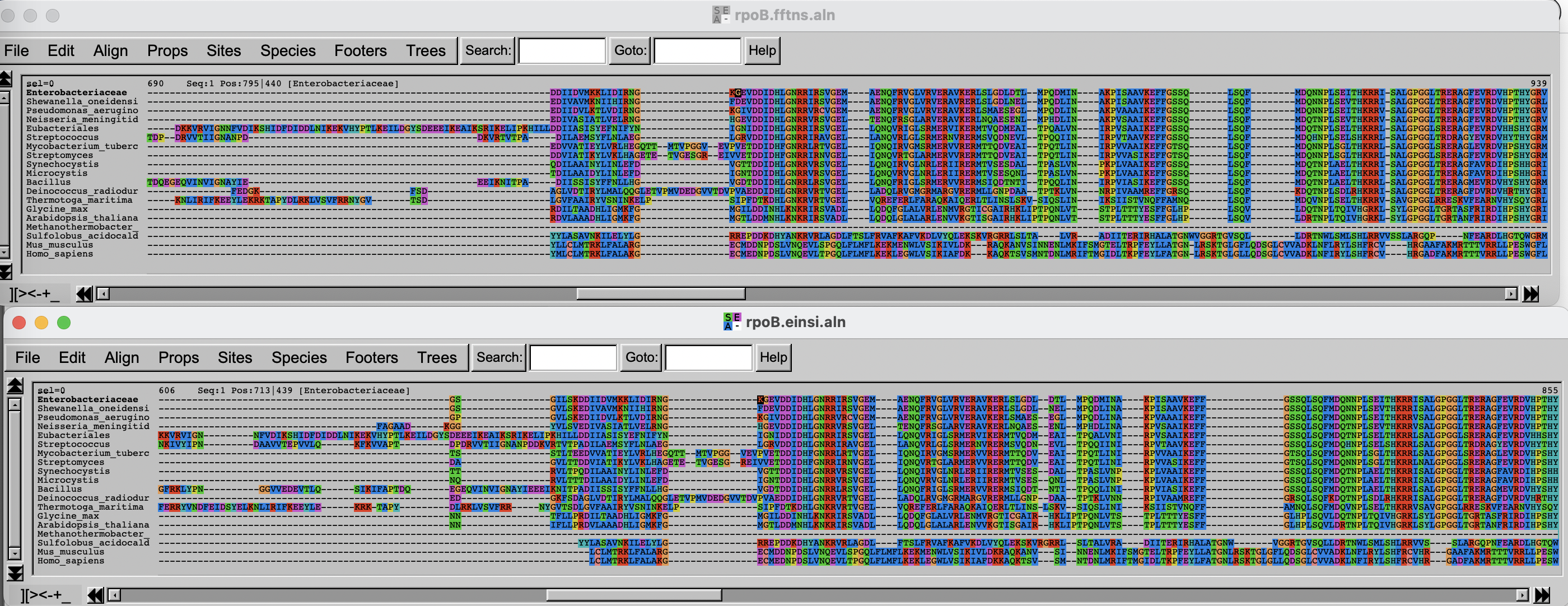

Alignment visualization

You will now inspect the two resulting alignment methods. There are

no convenient way to do that from Uppmax, and the easiest solution is to

download the alignments on your computer and to use

either seaview

(you will need to install it on your computer) or an online alignment

viewer like AlignmentViewer.

Arrange the two windows on top of each other. Change the fontsize (Props -> Fontsize in Seaview) to 8 to see a larger portion of the alignment.

Challenge

Can you spot differences? Which alignment is longer?

Hint: try to scroll to position 800-900. What do you see there? How are the blocks arranged?

If you have time over, spend it exploring the different options of Seaview/AlignmentViewer.

Task 2: Trim the alignment

Often, some regions of the alignment don’t align properly, either because they contain low complexity segments (hinges in proteins) or evolved through repeated insertions/deletions, which alignment program cannot handle properly. It is thus good practice to remove (trim) these regions, as they are likely to worsen the quality of the subsequent phylogenetic trees. On the other hand, trimming too much of the alignment removes also potentially valuable information. There is thus a balance to be found.

In this part, you will use two different alignment trimmers, TrimAl and ClipKIT, on the

results of mafft’s E-INS-i algorithm.

Trimming with TrimAl

First, load the module and look at the list of options available with

trimAl.

trimAl offers a lot of different options. You are going

to explore two different: gap threshold (-gt) and the

heuristic method -automated1, which automatically decides

between three automated methods, -gappyout,

-strict and -strictplus, based on the

properties of the alignment. The gap threshold methods removes columns

that contain a fraction of sequences lower than the cut-off.

For comparison purposes, you will be adding an html output.

Challenge 2.1: TrimAl with gap threshold

Use trimAl to remove positions in the alignment that

have more than 40% gaps.

The “gap threshold” is actually expressed as fraction of non-gap residues.

Challenge 2.2: TrimAl with automated trimming

Use trimAl with the automated heuristic algorithm.

Add a -automated1 option and rerun as above, choosing a

different output file.

Challenge 2.3: Compare the results

Get the files (both alignments and html files) to your own laptop and

visualize the results, by opening the html files with your

browser and the alignment files with seaview or the viewer

you used above.

On your own laptop, go inside the folder where you want to import the

files. Use scp. On some OS it is necessary to escape the

wildcard *. If the output says something about

no matches found, try that.

As before, if you do not have access to a terminal on your windows laptop, use MobaXterm and Session > SFTP to copy files to your computer.

Trimming with ClipKIT

ClipKIT is

one of the more recent tools to trim multiple sequence alignments. In a

nutshell, it tries to preseve phylogenetically-informative sites, rather

than trimming gappy regions. Although it also has multiple options and

modes, you will only use the default mode, smart-gap.

OUTPUT

---------------------

| Output Statistics |

---------------------

Original length: 2043

Number of sites kept: 1543

Number of sites trimmed: 500

Percentage of alignment trimmed: 24.474%

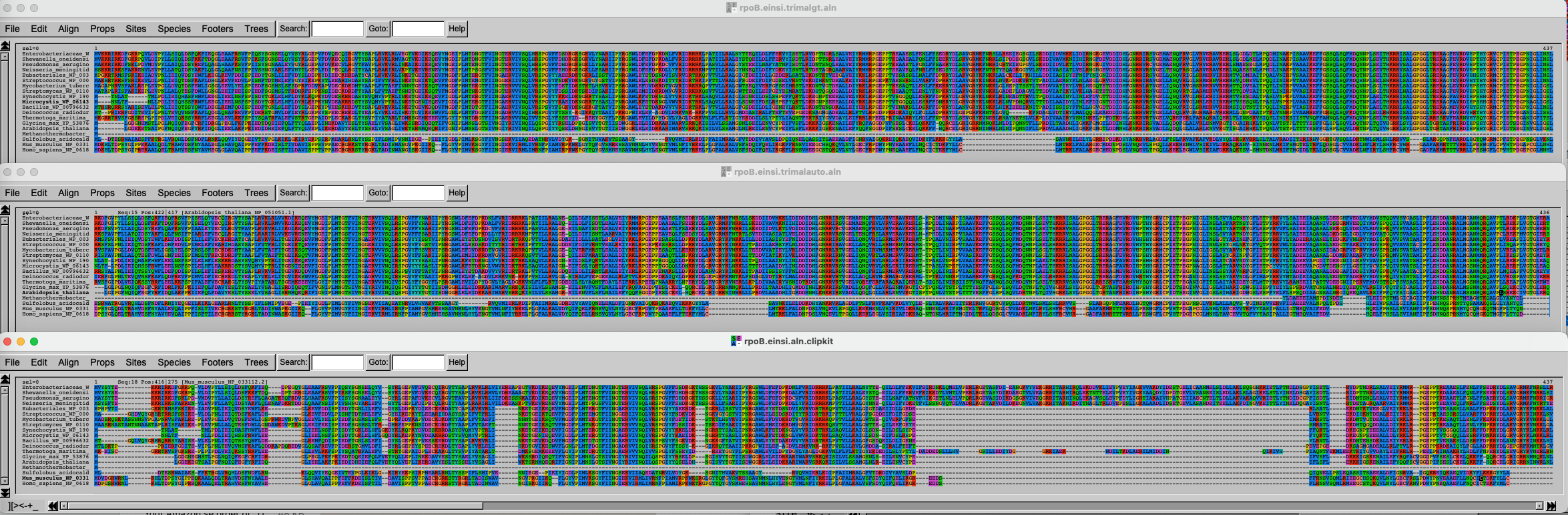

Execution time: 0.379sComparing all the results

Import the data and inspect the three alignments.

Challenge 2.5: Import data and compare results

Copy the alignment file and visualize with seaview or

the web-based visualization tool.

Use scp as above.

Then use seaview or another viewer to visualize and

compare results.

The three alignments on top of each other look like this. Click on this link to better see the figure.

It is of course difficult to draw conclusions based on this figure, but can you spot some trends? What alignment is more likely to generate good results?

Content from Phylogenetics

Last updated on 2026-02-10 | Edit this page

Overview

Questions

- How do you build a simple, distance-based phylogenetic tree?

- How do you build a phylogenetic tree with more advanced methods?

- How do you ascertain statistical support for phylogenetic trees?

Objectives

- Learn the basic of phylogenetics tree building, taking the simplest of the examples with a UPGMA tree.

- Learn how to use phylogenetics algorithms, neighbor-joining and maximum-likelihood.

- Learn how to perform and show bootstraps.

Exercise 1: Paper-and-pen phylogenetic tree

Setup

The exercise is done for a large part with pen and paper, and then a

demonstration in R on your laptop, using RStudio. We’ll also use the R

package ape, which

you should install if it’s not present on your setup. Commands can be

typed or pasted in the “Console” part of RStudio.

R

install.packages('ape')

OUTPUT

The following package(s) will be installed:

- ape [5.8-1]

These packages will be installed into "~/work/course-microbial-genomics/course-microbial-genomics/renv/profiles/lesson-requirements/renv/library/linux-ubuntu-jammy/R-4.5/x86_64-pc-linux-gnu".

# Installing packages --------------------------------------------------------

- Installing ape 5.8-1 ... OK [linked from cache]

Successfully installed 1 package in 5.4 milliseconds.And to load it:

R

library(ape)

UPGMA-based tree

Load the tree in fasta format, reading from a temp

file

R

FASTAfile <- tempfile("aln", fileext = ".fasta")

cat(">A", "----ATCCGCTGATCGGCTG----",

">B", "GCTGATCCGTTGATCGG-------",

">C", "----ATCTGCTCATCGGCT-----",

">D", "----ATTCGCTGAACTGCTGGCTG",

file = FASTAfile, sep = "\n")

aln <- read.dna(FASTAfile, format = "fasta")

Now look at the alignment. Notice there are gaps, which we don’t want in this example. We also remove the non-informative (identical) columns.

R

alview(aln)

OUTPUT

000000000111111111122222

123456789012345678901234

A ----ATCCGCTGATCGGCTG----

B GCTG.....T.......---....

C .......T...C.......-....

D ......T......A.T....GCTGR

aln_filtered <- aln[,c(7,8,10,12,14,16)]

alview(aln_filtered)

OUTPUT

123456

A CCCGTG

B ..T...

C .T.C..

D T...ATNow we have a simple alignment as in the lecture. Dots

(.) mean that the sequence is identical to the top row,

which makes it easier to calculate distances.

Calculating distance “by hand”

Let’s use a matrix to calculate distances between sequences. We’ll just count the number of differences between the sequences. We’ll then group the two closest sequences. Which are they?

| A | B | C | D | |

|---|---|---|---|---|

| A | ||||

| B | ||||

| C | ||||

| D |

Here is the solution:

| A | B | C | D | |

|---|---|---|---|---|

| A | ||||

| B | 1 | |||

| C | 2 | 3 | ||

| D | 3 | 4 | 5 |

The two closest sequences are A and B.

Calculating distance “by hand” (continued)

Let’s now cluster together A and B, and calculate the average distance from AB to the other sequences, weighted by the size of the clusters.

| AB | C | D | |

|---|---|---|---|

| AB | |||

| C | |||

| D |

The average distance for AB to C is calculated as follow:

\(d(AB,C) = \dfrac{d(A,C) + d(B,C)}{2} = \dfrac{2 + 3}{2} = 2.5\)

And so on for the other distances:

| AB | C | D | |

|---|---|---|---|

| AB | |||

| C | 2.5 | ||

| D | 3.5 | 5 |

Calculating distance “by hand” (continued)

Now the shortest distance is AB to C. Let’s recalculate the distance to D again.

| ABC | D | |

|---|---|---|

| ABC | ||

| D |

\(d(ABC,D) = \dfrac{d(AB,D) * 2 + d(C,D) * 1}{2 + 1} = \dfrac{3.5 * 2 + 5 * 1}{3} = 4\)

| ABC | D | |

|---|---|---|

| ABC | ||

| D | 4 |

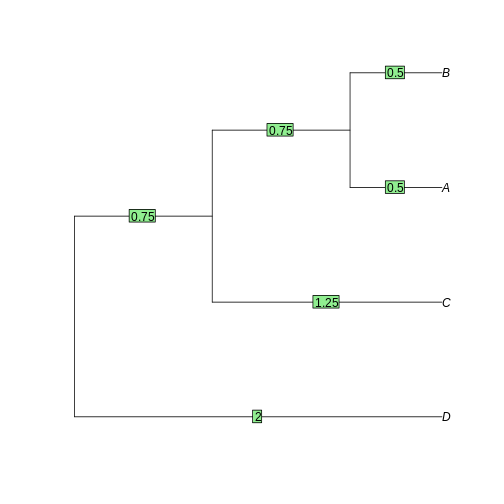

The tree is reconstructed by dividing the distances equally between the two leaves. - A-B: each 0.5. - AB-C: each side gets 2.5/2 = 1.25. The branch to AB is 1.25 - 0.5 = 0.75 - ABC-D: each side gets 4/2 = 2. The branch to ABC is 2 - 0.75 - 0.5 = 0.75

Let’s know do the same using bioinformatics tools.

Challenge

We’ll use dist.dna to calculate the distances. We’ll use

a “N” model, that just counts the differences and doesn’t correct or

normalizes. We’ll use the function hclust to perform the

UPGMA method calculation. The tree is then plotted, and the branch

lengths plotted with edgelabels:

R

dist_matrix <- dist.dna(aln_filtered, model="N")

dist_matrix

OUTPUT

A B C

B 1

C 2 3

D 3 4 5R

tree <- as.phylo(hclust(dist_matrix, "average"))

plot(tree)

edgelabels(tree$edge.length)

Exercise 2: Neighbor-joining and Maximum-likelihood tree

Introduction

In the previous episode, we inferred a simple phylogenetic tree using UPGMA, without correcting the distance matrix for multiple substitutions. UPGMA has many shortcomings, and gets worse as distance increases. Here we’ll test Neighbor-Joining, which is also a distance-based, and a maximum-likelihood based approach with IQ-TREE.

Neighbor-joining

The principle of Neighbor-joining method (NJ) is to start from a star-like tree, find the two branches that, if joined, minimize the total tree length, join then, and repeat with the joined branches and the rest of the branches.

Challenge

To perform NJ on our sequences, we’ll use the function inbuilt in

Seaview. First (re-)open the alignment we obtained from

mafft with the E-INS-i method, trimmed with ClipKIT. If it

is not on your computer anymore, transfer it again from Uppmax using

scp or SFTP.

If Seaview is not installed on your computer, download it and install it on your computer.

Challenge (continued)

In the Seaview window, select Trees -> Distance methods. Keep the BioNJ option ticked. BioNJ is an updated version of NJ, more accurate for longer distances. Tick the “Bootstrap” option and leave it at 100. We’ll discuss these later. Then click on “Go”.

What do you see? Is the tree rooted? Is the pattern species coherent with what you know of the tree of life? Any weird results?

Challenge

Redo the BioNJ tree for the other alignment we inferred.

Do you see any differences between the two trees? What can you make out of it?

Maximum likelihood

We will now use IQ-TREE to infer a maximum-likelihood (ML) tree of

the RpoB dataset we aligned with mafft previously. While

you performed the first trees on your laptop, you will infer the ML tree

on Uppmax. As usual, use the interactive command to gain

access to compute time. Go to the phylogenetics folder

created in the MSA episode, and load the right modules.

Challenge

Get to the right folder, require compute time and load the right

modules. The project is uppmax2025-3-4. The module

containing IQ tree is called iqtree.

Loading the iqtree module resulted in:

OUTPUT

iqtree/2.2.2.6-omp-mpi: executables are prefixed with 'iqtree2'. Additional modules are required for the multi-node MPI version. See 'module help iqtree/2.2.2.6-omp-mpi'.You won’t be using the multi-node MPI version, so you don’t need to

load additional modules. But you will need to use iqtree2

instead of the “normal” iqtree.

Then run your first tree, on the ClipKIT-trimmed

alignment.

Here, we tell IQ-TREE to use the alignment

-s rpoB.einsi.clipkit.aln, and to test among the standard

substitution models which one fits best (-m MFP). We also

tell IQ-TREE to perform 1000 ultra-fast bootstraps

(-B 1000). We’ll discuss these later.

IQ-TREE is a very complete program that can do a large variety of phylogenetic analysis. To get a flavor of what it’s capable of, look at its extensive documentation.

Have a look at the output files:

OUTPUT

rpoB.einsi.clipkit.aln.bionj rpoB.einsi.clipkit.aln.iqtree rpoB.einsi.clipkit.aln.model.gz

rpoB.einsi.clipkit.aln.ckp.gz rpoB.einsi.clipkit.aln.log rpoB.einsi.clipkit.aln.splits.nex

rpoB.einsi.clipkit.aln.contree rpoB.einsi.clipkit.aln.mldist rpoB.einsi.clipkit.aln.treefileThere are two important files:

* *.iqtree file provides a text summary of the analysis. *

*.treefile is the resulting tree in Newick

format.

To visualize the tree, open the Beta version of phylo.io.

Click on “add a tree” and copy/paste the content of the

treefile (use e.g. less or cat to

display it) in the box. Make sure the “Newick format” is selected and

click on “Done”.

The tree appears as unrooted. It is good practice to start by ordering the nodes and root it. There is no good way to automatically order the branches in phylo.io as of yet, but rerooting can be done by clicking on a branch and selecting “Reroot”. Reroot the tree between archaea and bacteria. Now the tree makes a bit more sense.

Scrutinize the tree. Is it different from the BioNJ tree generated in Seaview? How?

Challenge

Redo a ML tree from the other alignment (FFT-NS) we inferred with

mafft and display the resulting tree in FigTree.

BASH

iqtree2 -s <alignment file> <option and text for testing model> <option and test for 1000 fast bootstraps> Import the tree on your computer and load it with FigTree or with phylo.io

Bootstraps

We have inferred four trees: * Two based on the alignment generated from the E-INS-i algorithm (trimmed by ClipKIT), two from the FFT-NS. * Two inferred with the BioNJ algorithm and two with the ML algorithm (IQ-Tree)

Along the way, we’ve generated bootstraps for all our trees. Now show them on all four trees. * For the Seaview trees, tick the ‘Br support’ box * For the trees shown in phylo.io, click on “Settings” > “Branch & Labels” and above the branch, click the drop-down menu and select “Data”.

Challenge

Compare all four trees. Do you find any significant differences?

What are Glycine and Arabidopsis? What about Synechocystis and Microcystis?

Search for the accession number of the Glycine RpoB sequence on NCBI. Any hint there?

The two plant RpoB sequences are from chloroplastic genomes, and thus branch together with the cyanobacterial sequences, in some of the phylogenies at least.

Challenge (continued)

What about the support values for grouping these two groups? How high are they?

- The simplest of the trees are distance-based.

- UPGMA works by clustering the two closest leaves and recalculating the distance matrix.

- Neighbor-joining is distance-based and fast, but not necessarily very accurate

- Maximum-likelihood is slower, but more accurate

Exercise 3: Genetic drift

As a practical way to understand genetic drift, let’s play with population size, selection coefficients, number of generations, etc.

Overview

Questions

- How does random genetic drift influence fixation of alleles?

- What is the influence of population size?

Objectives

- Learn the basic of phylogenetics tree building, taking the simplest of the examples with a UPGMA tree.

- Learn how to use phylogenetics algorithms, neighbor-joining and maximum-likelihood.

- Learn how to perform and show bootstraps.

Overview

Questions

- How does random genetic drift influence fixation of alleles?

- What is the influence of population size?

Objectives

- Understand how population size influences the probability of fixation of an allele.

- Understand how slightly deleterious mutations may get fixed in small populations.

Introduction

This exercise is to illustrate the concepts of selection, population size and genetic drift, using simulations. We will use mostly Red Lynx by Reed A. Cartwright.

Another option is to use a web interface to the R learnPopGen package, but the last one is mostly for biallelic genes (and thus not that relevant for bacteria).

Open now the Red Lynx website and get familiar with the different options.

You won’t need the right panel (but feel free to explore). The dominance option in the left panel won’t be used either.

Genetic Drift without selection

In the first part, you will only play with the number of generations, the initial frequency and the population size.

- Lower the number of generations to 200.

- Adjust the population size to 1000.

- Make sure the intial frequency is set to 50%.

- Run a few simulations (ca 20).

Did any allele got fixed? What is the range of frequencies after 200 generations?

In my simulations, no allele got fixed, the final allele frequencies range20-80%

Genetic Drift without selection (continued)

Now increase the population to 100’000, clear the graph, and repeat the simulations. What’s the range of final frequencies now?

In my simulations, no allele got fixed, and the final allele frequencies range 45-55%

Genetic Drift without selection (continued)

Now decrease the population to 10 individuals, clear the graph and repeat these simulations. What’s the range of final frequencies now?

In my simulations, one allele got fixed quickly, the latest one was at generation 100.

Genetic Drift without selection (continued)

What do you conclude here?

It is clear that stochastic (random) variation in allele frequencies strongly affects the probability of fixation of alleles in small populations, not so much in large ones.

Genetic Drift with selection

So far we’ve only looked at how allele frequencies vary in the absence of selection, that is when the two alleles provide an equal fitness. What’s the influence of random genetic drift when alleles are not neutral?

The selection strength is equivalent to the selection coefficient, i.e. how much higher relative fitness the new allele provides. A selection coefficient of 0.01 means that the organism harboring the new allele has a 1% increased fitness.

- Increase the number of generations to 1000.

- Set the selection strength to 0.01 (1%).

- Set the population size first to 100’000, then to 1000, then to 100.

How long does it take for the allele to get fixed - in average - with the three population sizes?

About the same time, but the trajectories are much smoother with larger populations. In the small population, it happens that the beneficial allele disappears from the population (although not often).

Fixation of slightly deleterious alleles in very small populations

We’re now simulating what would happen in a very small population (or a population that undergoes very narrow bottlenecks), when a gene mutates. We’ll have a very small population (10 individuals), a selection value of -0.01 (the mutated allele provides a 1% lower fitness), and a 10% initial frequency, which corresponds to one individual getting a mutation:

- Set the population to 10 individuals.

- Set generations to 200.

- Set initial frequency to 10%.

- Set the selection strength to -0.01.

Run many simulations. What happens?

Most new alleles go extinct quickly. In my simulations, I usually get one of the slightly deleterious mutations fixed after 20 simulations.

That’s it for that part, but feel free to continue playing with the different settings later.

- Random genetic drift has a large influence on the probability of fixation of alleles in small populations, even for non-neutral alleles.

- Random genetic drift has very little influence of the probability of fixation of alleles in large populations.

- Slightly deleterious mutations can get fixed into the population through random genetic drift, if the population is small enough and the selective value is not too large.

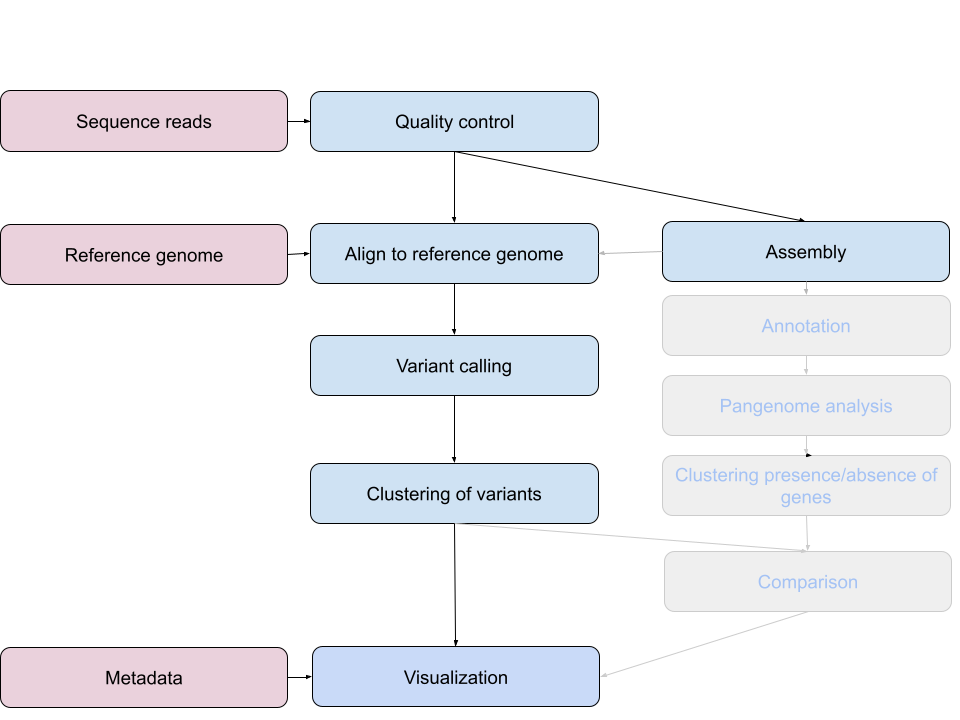

Content from Reads, QC and trimming

Last updated on 2026-02-10 | Edit this page

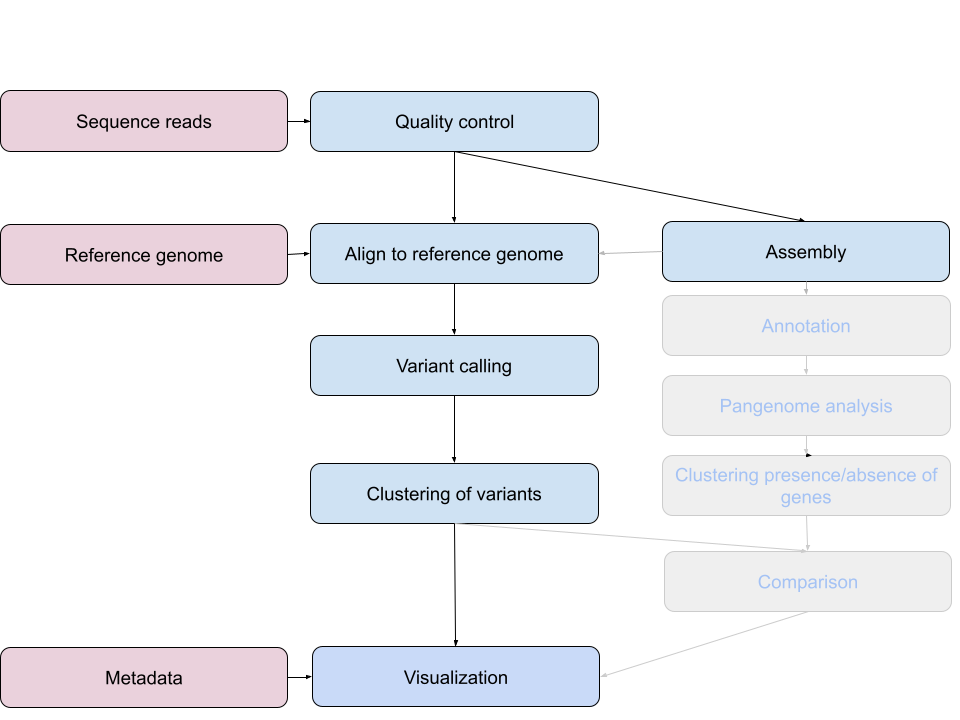

This episode starts a series of labs where you will trace an outbreak of Mycobacterium tuberculosis, from downloading the reads to making assumptions about the transmissions between patients.

The first part, here, describes how to retrieve all necessary data from public repositories - the raw sequenced data of our isolates and a reference genome. It also introduces for loops which we will use for the rest of our analysis.

Overview

Questions

- Trace an outbreak of Mycobacterium, from reads to phylogenetic tree.

- How can I organize my file system for a new bioinformatics project?

- How and where can data be downloaded?

- How can I describe the quality of my data?

- How can I get rid of sequence data that doesn’t meet my quality standards?

Objectives

- How do you use genomics and phylogenetics to trace a microbial outbreak?

- Create a file system for a bioinformatics project.

- Download files necessary for further analysis.

- Use ‘for’ loops to automate operations on multiple files

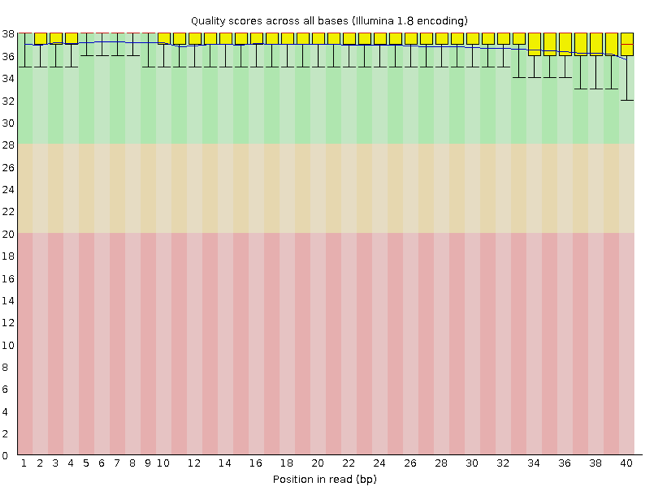

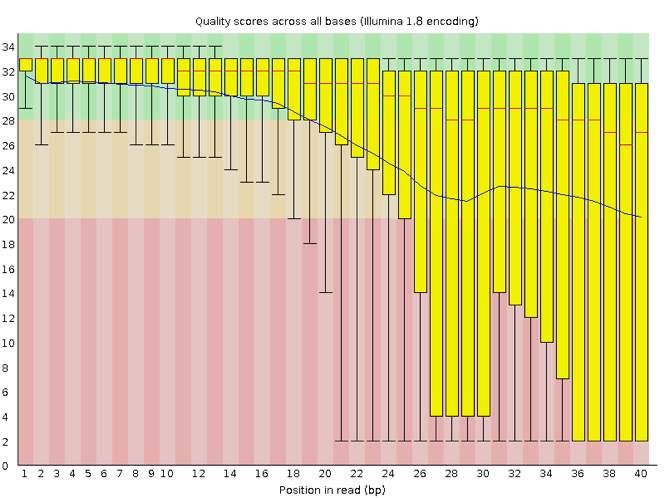

- Interpret a FastQC plot summarizing per-base quality across all reads.

- Clean FastQC reads for further analysis.

- Use

forloops to automate operations on multiple files.

Introduction

This part of the course is largely inspired from another tutorial developed by Anita Schürch. We will use (part of) the data described in Bryant et al., 2013.

Background of the data

Epidemiological contact tracing through interviewing of patients can identify potential chains of patients that transmitted an infectious disease to each other. Contact tracing was performed following the stone-in-the-pond principle, which interviews and tests potential contacts in concentric circles around a potential bash case.

Tuberculosis (TB) is an infectious disease caused by Mycobacterium tuberculosis. It mostly affects the lungs. An infection with M. tuberculosis is often asymptomatic (latent infection). Only in about 10% of the cases the latent infection progresses to an active infection during a patients lifetime, which, if untreated, leads to death in about half of the cases. The symptoms of an active TB infection include cough, fever, night sweats, weight loss etc. An active TB infection can spread. Once exposed, people often have latent TB. To identify people with latent TB, a skin test can be applied.

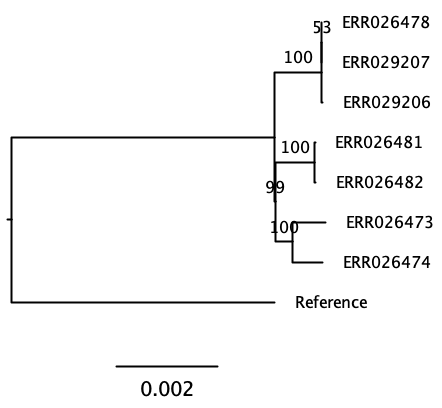

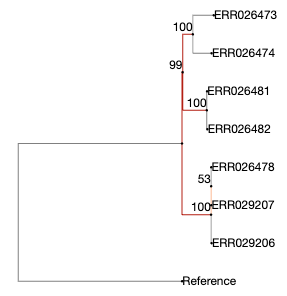

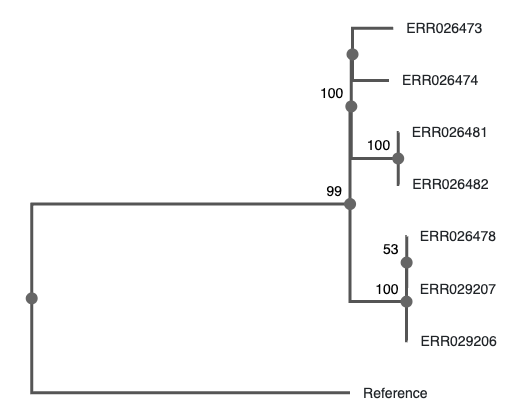

Here we have 7 tuberculosis patients with active TB, that form three separate clusters of potential transmission as determined by epidemiological interviews. Patients were asked if they have been in direct contact with each other, or if they visited the same localities. From all patients, a bacterial isolate was grown, DNA isolated, and whole-genome sequenced on an Illumina sequencer.

The three clusters consist of:

- Cluster 1

- Patient A1 (2011) - sample ERR029207

- Patient A2 (2011) - sample ERR029206

- Patient A3 (2008) - sample ERR026478

- Cluster 2

- Patient B1 (2001) - sample ERR026474

- Patient B2 (2012) - sample ERR026473

- Cluster 3

- Patient C1 (2011) - sample ERR026481

- Patient C2 (2016) - sample ERR026482

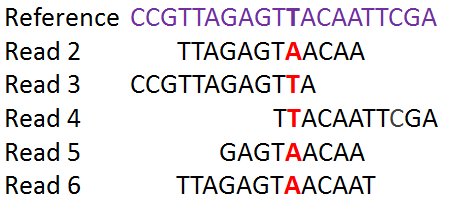

The second goal of this lab is to affirm or dispute the inferred transmissions by comparing the bacterial genomes with each other. We will do that by identifying SNPs between the genomes.

Getting your project started

Project organization is one of the most important parts of a sequencing project, and yet is often overlooked amidst the excitement of getting a first look at new data. Of course, while it’s best to get yourself organized before you even begin your analyses,it’s never too late to start, either.

Genomics projects can quickly accumulate hundreds of files across tens of folders. Every computational analysis you perform over the course of your project is going to create many files, which can especially become a problem when you’ll inevitably want to run some of those analyses again. For instance, you might have made significant headway into your project, but then have to remember the PCR conditions you used to create your sequencing library months prior.

Other questions might arise along the way:

- What were your best alignment results?

- Which folder were they in: Analysis1, AnalysisRedone, or AnalysisRedone2?

- Which quality cutoff did you use?

- What version of a given program did you implement your analysis in?

In this exercise we will setup a file system for the project we will be working on during this workshop.

Challenge

We will start by creating a sub directory that we can use for the

rest of the labs. First navigate to your own directory on Uppmax and

start an interactive session. Then confirm that you are in

the correct directory using the pwd command.

You should see the output:

OUTPUT

/proj/g2020004/nobackup/3MK013/<username>Exercise

Use the mkdir command to make the following

directories:

molepi

molepi/docs

molepi/data

molepi/resultsUse ls -R to verify that you have created these

directories. The -R option for ls stands for

recursive. This option causes ls to return the contents of

each subdirectory within the directory iteratively.

You should see the following output:

OUTPUT

molepi/:

data docs results

molepi/data:

molepi/docs:

molepi/results: Selection of a reference genome

Reference sequences (including many pathogen genomes) are available at NCBI’s refseq database

A reference genome is a genome that was previously sequenced and is closely related to the isolates we would like to analyse. The selection of a closely related reference genome is not trivial and will warrant an analysis in itself. However, for simplicity, here we will work with the M. tuberculosis reference genome H37Rv.

Download reference genomes from NCBI

Download the M.tuberculosis reference genome from the NCBI ftp site.

First, we switch to the data subfolder to store all our

data

Download reference genomes from NCBI (continued)

The reference genome will be downloaded programmatically from NCBI

with wget. wget is a computer program that

retrieves content from web servers. Its name derives from World Wide Web

and get.

Find the location of the gzipped fna file for that

reference genome via NCBI’s genome, by finding the FTP site and copying

the link to the *_genomic.fna.gz file and using that as an

argument to wget.

Search for Mycobacterium tuberculosis on the Datasets/Genome

website. Identify the “reference genome” (at the top), click on the

accession number, and find the FTP tab. There is many

files, among which

GCF_000195955.2_ASM19595v2_genomic.fna.gz. Right-click and

copy the link.

Download reference genomes from NCBI (continued)

This file is compressed as indicated by the extension of

.gz. It means that this file has been compressed using the

gzip command.

Extract the file by typing

Make sure that is was extracted

OUTPUT

GCF_000195955.2_ASM19595v2_genomic.fnaThe genome has 4’411’532 bp. The stat command of

seqkit is helpful.

OUTPUT

file format type num_seqs sum_len min_len avg_len max_len

GCF_000195955.2_ASM19595v2_genomic.fna FASTA DNA 1 4,411,532 4,411,532 4,411,532 4,411,532

Loops

Loops are key to productivity improvements through automation as they allow us to execute commands repeatedly. Similar to wildcards and tab completion, using loops also reduces the amount of typing (and typing mistakes). Our next task is to download our data (see the introduction to this episode) from the short read archive (SRA) at the European Nucleotide Archive (ENA). There are many repositories for public data. Some model organisms or fields have specific databases, and there are ones for particular types of data. Two of the most comprehensive are the National Center for Biotechnology Information (NCBI) and European Nucleotide Archive (EMBL-EBI). In this lesson we’re working with the ENA, but the general process is the same for any database.

We can do this one by one but given that each download takes about one to two hours, this could keep us up all night. Instead of downloading one by one we can apply a loop. Let’s see what that looks like and then we’ll discuss what we’re doing with each line of our loop.

BASH

for sample in ERR029207 ERR029206 ERR026478 ERR026474 ERR026473 ERR026481 ERR026482

do

echo -e "$sample"

wget --spider ftp://ftp.sra.ebi.ac.uk/vol1/fastq/"${sample:0:6}"/"${sample}"/"${sample}"_*.fastq.gz

doneWhen the shell sees the keyword for, it knows to repeat

a command (or group of commands) once for each item in a list. Each time

the loop runs (called an iteration), an item in the list is assigned in

sequence to the variable, and the commands inside the

loop are executed, before moving on to the next item in the list.

Inside the loop, we call for the variable’s value by putting

$ in front of it. The $ tells the shell

interpreter to treat the variable as a variable name

and substitute its value in its place, rather than treat it as text or

an external command.

In this example, the list is seven accession numbers of reads

belonging to the genomes we are interested in. Each time the loop

iterates, it will assign a sample name to the variable

sample and echo (print) the value of the

variable. In the second line, it will run the wget

command.

The first time through the loop, $sample is

ERR029207. The FTP site at EBI is constructed so that fastq

files are stored in a vol1/fastq subfolder. Read files are

not directly in that subfolder, but grouped in subfolders starting with

the six first characters of the accession number (ERR029 in

the first case), then in the accession number subfolder. To get to the

right place, the file path is constructed with the root path

(ftp://ftp.sra.ebi.ac.uk/vol1/fastq/), to which the first 6

characters of the accession number is added through that special

variable operation ("${sample:0:6}") to get to the right

subfolder. Finally, the correct accession subfolder

("${sample}"), the file name prefix

("${sample}"_*.fastq.gz") and the suffix

(_*.fastq.gz) are added. The * ensures that we

get reads from both ends.

Use {} to wrap the variable so that

.fastq.gz will not be interpreted as part of the variable

name. In addition, quoting the shell variables is a good practice AND

necessary if your variables have spaces in them.

For the second iteration, $sample becomes

ERR029206.

We added a --spider option to wget just for

this exercise, to retrieve only the names of the files and not the

actual file, to spare some time downloading files. The data is present

in the data/fastq subfolder of our group folder:

OUTPUT

ERR026473_1.fastq.gz ERR026474_2.fastq.gz ERR026481_1.fastq.gz ERR026482_2.fastq.gz ERR029207_1.fastq.gz

ERR026473_2.fastq.gz ERR026478_1.fastq.gz ERR026481_2.fastq.gz ERR029206_1.fastq.gz ERR029207_2.fastq.gz

ERR026474_1.fastq.gz ERR026478_2.fastq.gz ERR026482_1.fastq.gz ERR029206_2.fastq.gzFor more, check Bash Pitfalls

Follow the Prompt

The shell prompt changes from $ to > and

back again as we were typing in our loop. The second prompt,

>, is different to remind us that we haven’t finished

typing a complete command yet. A semicolon, ;, can be used

to separate two commands written on a single line.